Have you ever wondered about a powerful tool in the world of words, perhaps one that sounds a bit like "bert mccracken" when you hear it mentioned? It's not a person, not a rock star, and not someone you might meet on the street, but it is a really big deal for how computers get a grip on human language. This particular "Bert" is a language model: a system that helps machines make more sense of what we write and say.

This helper, which people call BERT, got its start in October of 2018, when researchers at Google released it publicly. It's a kind of model that learns to take written words and turn them into something a computer can work with: a list of numbers for each word that captures how that word is being used. It's all about helping computers "read" text in a much better way than they used to, almost like a new pair of glasses for them.

What this system really changes is how we approach tasks that involve language. It is famous for its ability to consider the bigger picture, the way words fit together. When a computer uses BERT, it isn't looking at one word at a time but at the whole collection of words around it, which gives it a far better sense of what's really being talked about. It's a pretty big step toward making machines more helpful with our everyday language needs.

Table of Contents

- What is This "Bert" We're Talking About, like the one in "bert mccracken"?

- How Does This "Bert" Helper Work?

- What's So Special About This "Bert" Approach?

- Can "Bert" Really Fill in the Blanks, like "bert mccracken" might fill in a missing lyric?

- Why Does "Bert" Matter for Language Tasks?

- How Has "Bert" Made Things Better?

- Where Can We See "Bert" in Action?

- What Does "Bert" Do With Input and Output Text?

What is This "Bert" We're Talking About, like the one in "bert mccracken"?

When we talk about "Bert," especially in the way it sounds a little like "bert mccracken," we are actually referring to a specific kind of computer program, a very smart one that helps machines understand words. This program, or model as it is called, first showed up in October of 2018, and it was put together by researchers at Google. They created it to help computers get a better grasp of human language, which is a pretty big challenge because our language has so many twists and turns.

BERT learns to take written words and represent them in a special way, almost like giving each one an internal picture or a unique signature that the computer can work with. So when a computer looks at text, it doesn't just see a jumble of letters; it sees something that carries meaning. That makes BERT a very important piece of the puzzle for making computers smarter about what we write and say.

Here are some key facts about this language helper:

| Fact | Detail |
| --- | --- |
| Birth Date | October 2018 |
| Creators | Researchers at Google |
| Main Purpose | To help computers understand human language better |
| Key Ability | Looks at words from both directions to get their meaning |
| What it Does | Learns to make text into a sequence that computers can process |

How Does This "Bert" Helper Work?

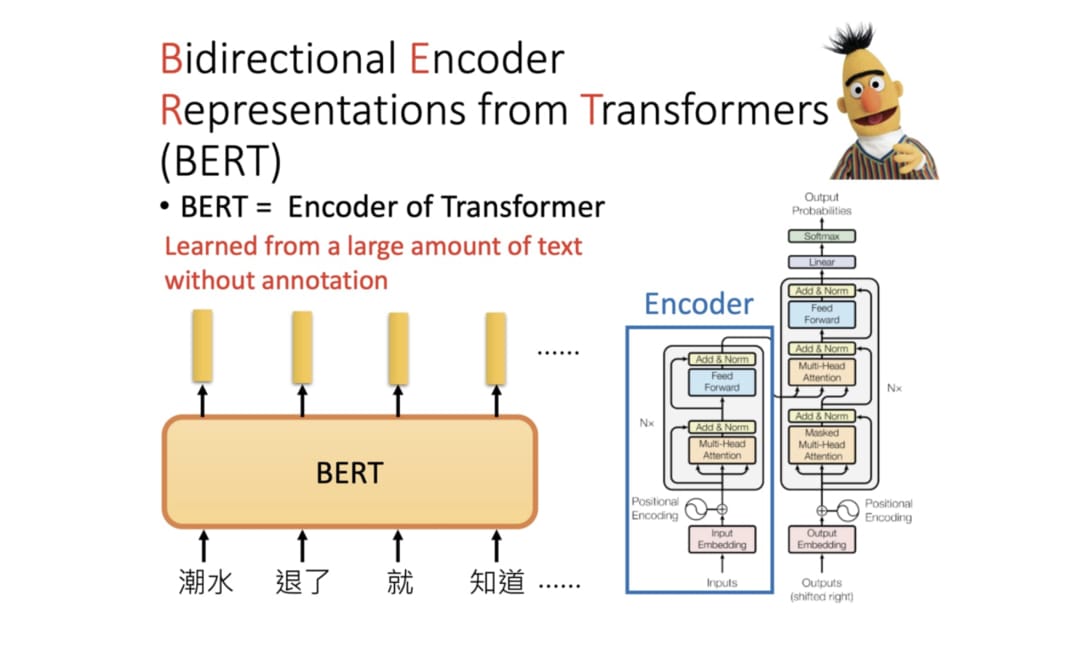

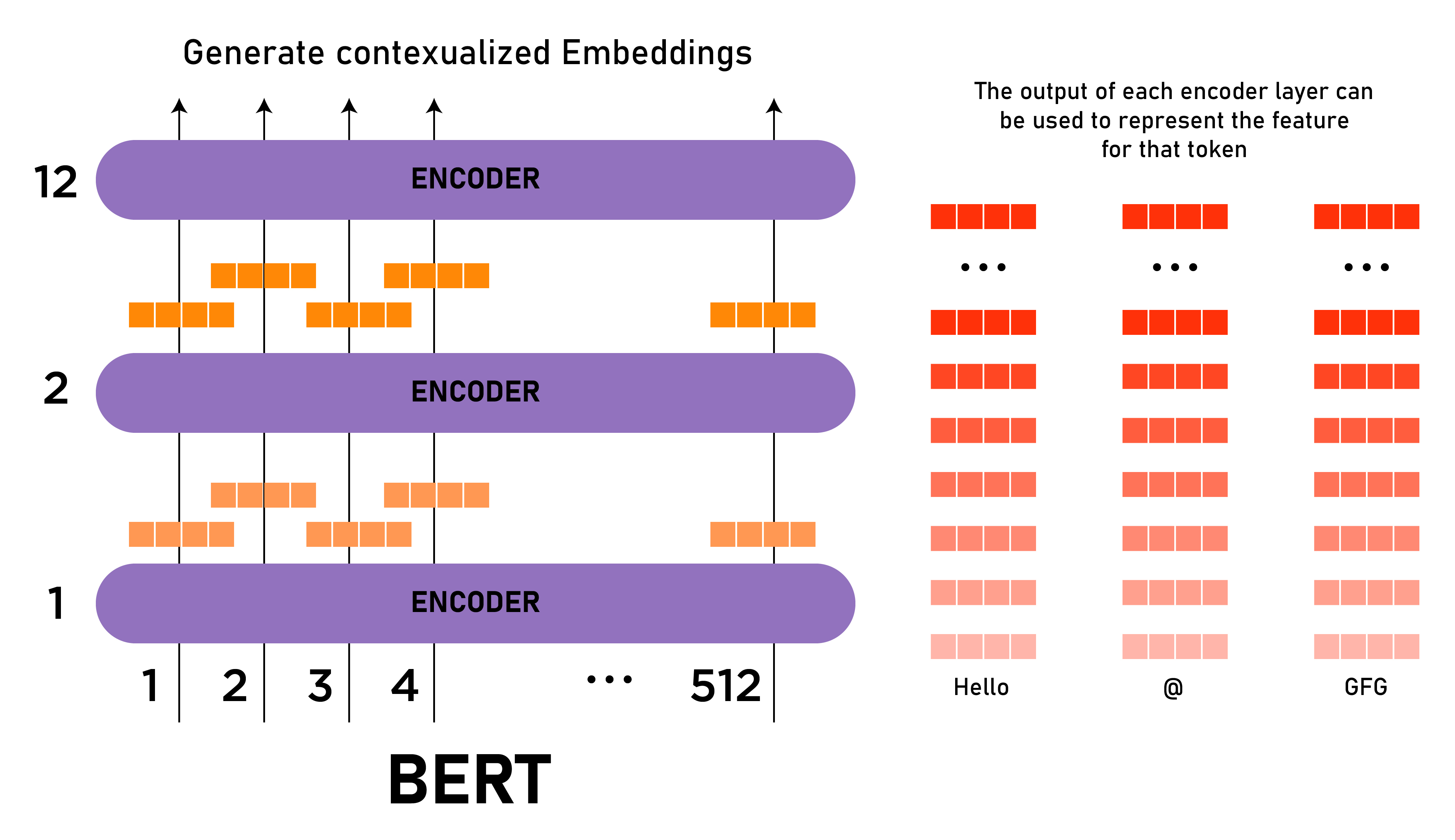

The name "BERT" stands for something quite long: Bidirectional Encoder Representations from Transformers. That sounds like a mouthful, but it really just describes how the system goes about its work. The "bidirectional" part is the important bit. When BERT looks at a sentence, it doesn't only read from left to right, like we usually do. For every word, it takes into account the words that come before it and the words that come after it at the same time. It's almost like having two sets of eyes, one looking forward and one looking backward, so it gets a much fuller picture of what each word means in that particular group of words. This approach also helps it pick up connections between words that sit far apart in a sentence, which is something many earlier language models struggled with.
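To make the "both directions" idea a little more concrete, here is a minimal sketch in plain Python. It is not BERT's actual code; the function names (`causal_mask`, `bidirectional_mask`, `visible_words`) are made up for this illustration. It only shows which words a left-to-right reader is allowed to see for a given position, compared with a bidirectional reader.

```python
def causal_mask(n):
    """Left-to-right reading: position i may only see positions 0..i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def bidirectional_mask(n):
    """Bidirectional reading: every position may see every other position."""
    return [[True] * n for _ in range(n)]


def visible_words(tokens, mask, position):
    """Return the tokens that `position` is allowed to look at under `mask`."""
    return [tok for tok, allowed in zip(tokens, mask[position]) if allowed]


tokens = ["the", "cat", "sat", "on", "the", "mat"]

# What can the word "sat" (position 2) see in each setup?
left_to_right_view = visible_words(tokens, causal_mask(len(tokens)), 2)
bidirectional_view = visible_words(tokens, bidirectional_mask(len(tokens)), 2)

print(left_to_right_view)   # only the words up to and including "sat"
print(bidirectional_view)   # the whole sentence
```

In the left-to-right setup, "sat" never gets to see "on the mat"; in the bidirectional setup it sees the whole sentence, which is the behavior the name describes.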

The "encoder representations" part means that BERT turns the words it reads into a coded form, a representation, that computers can use. Think of it like taking a picture of the words, except that instead of a visual picture it's a data picture that holds the important information about those words. This coded version is what the computer then uses to do its tasks. The "Transformers" part refers to the particular design the system is built on, called the Transformer. That design is what lets BERT look at a lot of words at once and figure out how they relate to each other, which is where much of its power comes from.
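The way a Transformer relates every word to every other word is usually called self-attention. The sketch below is a heavily simplified version written in plain Python, under some loud assumptions: the word vectors are invented numbers, and there are no learned weights, no multiple heads, and no stacked layers, all of which a real model has. It only shows the core step, where each word's new representation becomes a weighted mix of all the words in the sentence.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with no learned projections.

    Assumption for this sketch: queries, keys and values are all just the
    input vectors themselves. Real Transformers learn separate projections.
    """
    dim = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence (itself included).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        row = softmax(scores)
        weights.append(row)
        # New representation: weighted average of all the word vectors.
        outputs.append(
            [sum(w * vec[j] for w, vec in zip(row, vectors)) for j in range(dim)]
        )
    return outputs, weights


# Three made-up 2-dimensional "word vectors", purely for illustration.
toy_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(toy_vectors)

for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` says how much one word "listens" to each of the others, and every row adds up to 1. Because no position is hidden, every word can draw on words both before and after it.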

What's So Special About This "Bert" Approach?

What makes this "Bert" system truly stand out is its knack for understanding context. Words can mean different things depending on the other words around them. Think about the word "bank." It could mean the side of a river, a place where you keep your money, or even tilting an airplane. Older programs that treated each word the same way wherever it appeared often had trouble telling these apart, but BERT is much better at it, because it looks at the whole sentence, or even a bigger piece of text, to figure out what a word means in that particular situation. This ability to consider the entire picture, rather than isolated words, makes it far more accurate at working out what someone is trying to communicate. It's a bit like a person who can read between the lines, which is quite a remarkable skill for a computer to have.

This way of looking at things, where it considers the surrounding words, is what makes BERT so effective for so many different language-related jobs. It doesn't just memorize definitions; it learns how words behave when they are together, so it can pick up on subtle hints and connections that other systems might miss. For instance, if you say "I went to the bank to deposit money," BERT can tell from "deposit" and "money" that you mean the financial institution, not the river's edge. This deeper understanding is what allows it to perform tasks like answering questions or summarizing text more accurately. It's quite a leap forward in how machines process and respond to our everyday conversations and writing.

Can "Bert" Really Fill in the Blanks, like "bert mccracken" might fill in a missing lyric?

One of the cool things BERT can do, and it's a good way to show how it works, is to predict a word that's missing from a sentence. Imagine you have a sentence with one word hidden and replaced by a special marker, "[MASK]". BERT can look at the words around that hidden spot and make a very good guess about what word should go there. This is a bit like how you can finish a sentence when someone pauses midway, because you understand the flow of the conversation. For example, given "The cat sat on the [MASK]", BERT would very likely suggest something like "mat" or "rug", because it has learned how those words usually fit together in that kind of sentence. This ability to fill in the blanks is a key part of how it learns to understand language so well. It's like a practice exercise that helps it get better at recognizing patterns and meanings in words.
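Here is a toy version of the fill-in-the-blank idea, written in plain Python. To be clear, this is not how BERT works inside: BERT is a neural network, while this sketch is a simple counting table, and the tiny `corpus`, the `build_table` and `fill_mask` helpers, and the `<s>` and `</s>` boundary markers are all invented for the example. What it does share with BERT is the basic trick of using the words on both sides of the gap to guess what is missing.

```python
from collections import Counter, defaultdict

# A tiny made-up "training corpus", purely for illustration.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the mat",
    "the cat sat on the rug",
    "the bird sat on the fence",
]


def build_table(sentences):
    """Count which word appears between each (left neighbor, right neighbor) pair."""
    table = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for i in range(1, len(words) - 1):
            table[(words[i - 1], words[i + 1])][words[i]] += 1
    return table


def fill_mask(sentence, table):
    """Suggest words for the [MASK] slot, most frequently seen first."""
    words = ["<s>"] + sentence.split() + ["</s>"]
    i = words.index("[MASK]")
    candidates = table.get((words[i - 1], words[i + 1]), Counter())
    return [word for word, _ in candidates.most_common()]


table = build_table(corpus)
suggestions = fill_mask("the cat sat on the [MASK]", table)
print(suggestions)
```

A counting table like this only ever looks at the two immediate neighbors and falls apart on anything it has not seen word for word. BERT's advantage is that it uses the whole sentence and can generalize to sentences that never appeared in its training text.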

Predicting these missing words is not just a party trick; it's a large part of how BERT gets so smart about language in the first place. By doing this task over and over again with huge amounts of text, it builds up a vast knowledge of how words are used in different situations. It learns which words tend to appear together and which words make sense in particular contexts. This practice is what helps it create those special "representations" of text that we talked about earlier. So when you see an example of BERT predicting a "[MASK]" token, you're actually seeing a small piece of the fundamental way it learns to grasp the meaning of human communication. It's a pretty neat way to train a computer, isn't it?
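The hiding step used during training can be sketched in a few lines of Python. The numbers below follow the recipe described in the original BERT paper: about 15% of the tokens are picked for prediction, and of those, roughly 80% are swapped for "[MASK]", 10% are swapped for a random word, and 10% are left as they are. Everything else here is an assumption for illustration, including the `mask_tokens` function name, the tiny vocabulary, and the use of whole words instead of the word pieces BERT actually works with.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, rng, select_prob=0.15):
    """Hide some tokens and remember the originals as prediction targets.

    Returns (masked, labels). labels[i] is the original token where the
    model would be asked to predict, and None everywhere else.
    """
    masked = list(tokens)
    labels = [None] * len(tokens)
    for i, token in enumerate(tokens):
        if rng.random() >= select_prob:
            continue  # this position is not selected for prediction
        labels[i] = token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK                # most of the time: hide the word
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)   # sometimes: swap in a random word
        # otherwise: leave the word unchanged, but still predict it
    return masked, labels


rng = random.Random(0)  # fixed seed so the sketch is repeatable
tokens = "the cat sat on the mat".split()
vocab = sorted(set(tokens))

# select_prob is raised here only so a six-word sentence shows some masking.
masked, labels = mask_tokens(tokens, vocab, rng, select_prob=0.5)
print(masked)
print(labels)
```

During training, the model sees `masked` as its input and is scored on how well it recovers the words recorded in `labels`.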

Why Does "Bert" Matter for Language Tasks?

BERT matters a great deal for natural language processing, or NLP for short. This is the area where computers try to understand, interpret, and work with human language. Before BERT came along, these tasks were often quite difficult for computers to do well. They might understand individual words but struggle with the bigger picture, with the subtle meanings and connections that make our language so rich. BERT changed that significantly. Because of its ability to look at words in context and from both directions, it